
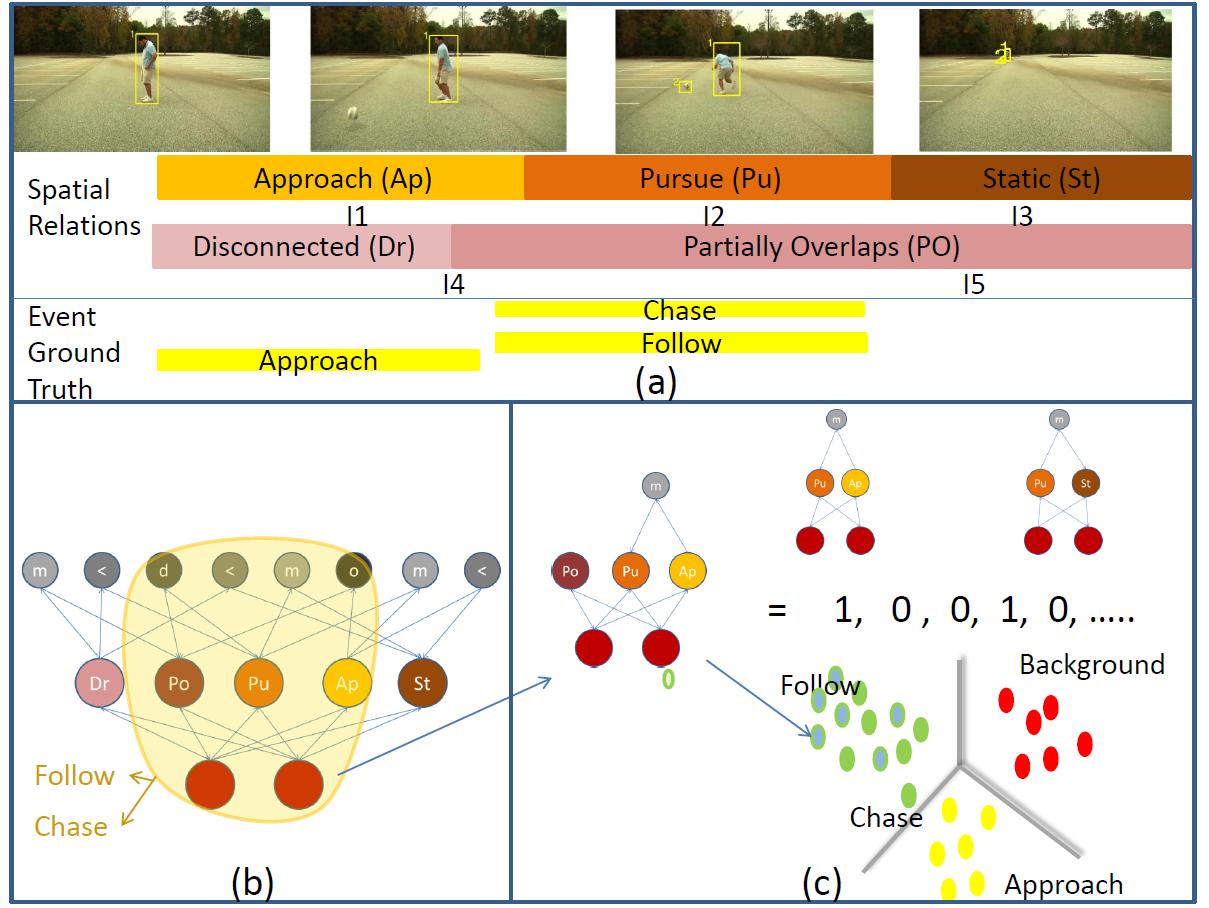

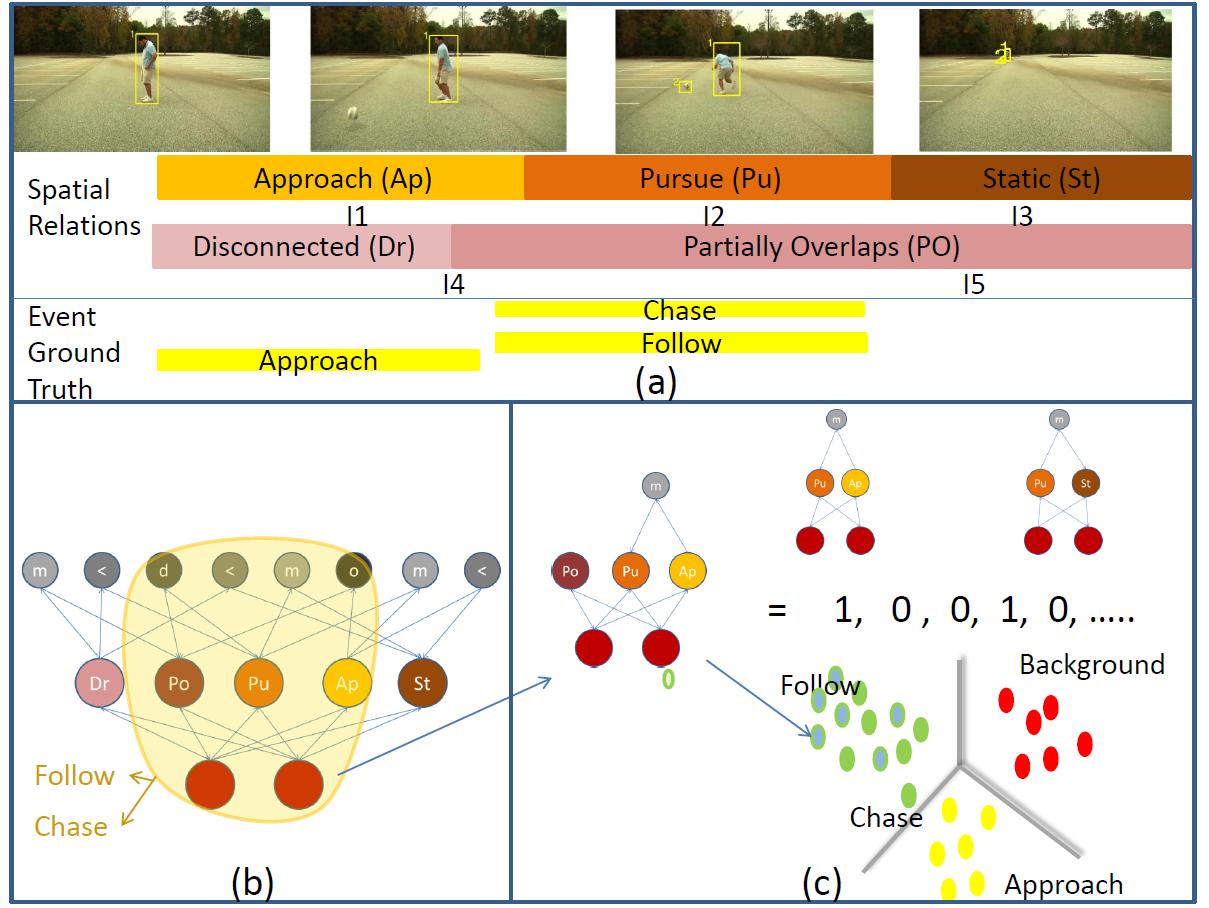

We describe a novel approach in which the interactions in a video are represented by an activity graph. The activity graph encodes all the interactions in the video, i.e., all the qualitative spatiotemporal relations between every pair of interacting, co-temporal object tracks over the entire video, together with other properties such as pre-computed "primitive events" and object types. Interactions between a subset of the objects, or interactions involving only a subset of the spatial relations, are captured by interaction sub-graphs of the activity graph. Learning uses interaction sub-graphs that are labelled in the ground truth with their corresponding event label(s) to learn an event model for each event under consideration. Given an unseen video, represented by its activity graph, event detection amounts to finding the most probable covering of that activity graph by interaction sub-graphs under the learned event model. Each detected interaction sub-graph is then mapped back to the video as an event detection: an interaction among a subset of objects during certain time intervals, together with its event label(s).
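
The following Python sketch illustrates one way an activity graph and its interaction sub-graphs might be built from object tracks. It is not the authors' implementation: the distance-threshold relations (`touch`, `near`, `far`), the coarse subset of Allen's interval relations, and every name (`Episode`, `episodes`, `activity_graph`, `interaction_subgraph`) are hypothetical assumptions chosen for illustration. Primitive events, object types, event-model learning and the search for the most probable covering are not shown.

```python
from dataclasses import dataclass
from itertools import combinations
from math import dist

# ASSUMPTION: hypothetical distance thresholds standing in for a qualitative
# spatial calculus; they are not the relation set used in the paper.
TOUCH, NEAR = 1.0, 5.0


def spatial_relation(p, q):
    """Map two (x, y) positions to a coarse qualitative relation."""
    d = dist(p, q)
    if d <= TOUCH:
        return "touch"
    if d <= NEAR:
        return "near"
    return "far"


@dataclass(frozen=True)
class Episode:
    """Maximal run of consecutive frames where one object pair holds one relation."""
    a: str
    b: str
    relation: str
    start: int  # first frame, inclusive
    end: int    # last frame, inclusive


def episodes(tracks):
    """tracks: object id -> {frame: (x, y)}. Returns episodes for every object pair."""
    result = []
    for a, b in combinations(sorted(tracks), 2):
        frames = sorted(tracks[a].keys() & tracks[b].keys())  # co-temporal frames only
        run = None  # (relation, start, end) of the episode currently being extended
        for f in frames:
            rel = spatial_relation(tracks[a][f], tracks[b][f])
            if run and run[0] == rel and f == run[2] + 1:
                run = (rel, run[1], f)  # same relation on the next frame: extend
            else:
                if run:
                    result.append(Episode(a, b, *run))
                run = (rel, f, f)  # relation changed or frames not contiguous
        if run:
            result.append(Episode(a, b, *run))
    return result


def temporal_relation(x, y):
    """ASSUMPTION: a coarse subset of Allen's interval relations on frame intervals."""
    if x.end + 1 < y.start:
        return "before"
    if x.end + 1 == y.start:
        return "meets"
    if y.end + 1 < x.start:
        return "after"
    if y.end + 1 == x.start:
        return "met_by"
    if (x.start, x.end) == (y.start, y.end):
        return "equal"
    return "overlaps"  # every other kind of intersection is lumped together here


def activity_graph(tracks):
    """Three layers: objects, episodes, and temporal relations between episode pairs."""
    eps = episodes(tracks)
    temporal = {(i, j): temporal_relation(eps[i], eps[j])
                for i, j in combinations(range(len(eps)), 2)}
    return {"objects": sorted(tracks), "episodes": eps, "temporal": temporal}


def interaction_subgraph(graph, objects, relations=None):
    """Restrict a graph to a subset of objects and, optionally, of spatial relations."""
    old = graph["episodes"]
    keep = [e for e in old
            if e.a in objects and e.b in objects
            and (relations is None or e.relation in relations)]
    index = {e: k for k, e in enumerate(keep)}  # re-number the surviving episodes
    temporal = {(index[old[i]], index[old[j]]): r
                for (i, j), r in graph["temporal"].items()
                if old[i] in index and old[j] in index}
    return {"objects": sorted(objects), "episodes": keep, "temporal": temporal}
```

In this sketch every pair of episodes receives a temporal relation, and a sub-graph simply keeps the episodes (and their temporal relations) whose objects and spatial relations fall in the requested subsets; for example, restricting to `{"person", "car"}` and `{"touch"}` would isolate only the contact episodes between those two tracks.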

Muralikrishna Sridhar, Anthony Cohn, David C. Hogg. "Unsupervised Learning of Event Classes from Video." Association for the Advancement of Artificial Intelligence (AAAI), Atlanta, Georgia, 2010.
